There are three things that made the iPhone possible: cellular networks, lasers, and solid-state electronics and transistors. All of these innovations owe a significant debt to fundamental research at Bell Labs, but those labs, along with many others, have since closed or been drastically scaled back. Where will future innovation come from?

Understanding how the landscape of corporate R&D has changed offers insight into better ways of harnessing today’s scientific ideas, says Professor Ashish Arora of Duke University in the paper ‘Changing Structure of the American Innovation Ecosystem’.

Fundamental research pioneered by corporate R&D

Speaking at the R&D Management Symposium 2020, Professor Arora argued that fundamental research pioneered by AT&T Bell Labs, DuPont, GE, IBM, Kodak and Xerox PARC between 1945 and 1985 drove technological breakthroughs such as transistors, synthetic fibres, lasers and computer technologies, whose benefits we are still seeing today.

He observes that the rise of corporate research was shaped by specific historical circumstances: growing markets, an emerging economy, weak universities and tremendous scientific and technical opportunities.

As many of those conditions no longer apply, the system is changing. Looking to the future, he warns that while the new innovation system holds a great deal of promise, it also has gaps: in support for the ‘deep science’ that brings disruptive change, and in scale, because the multidisciplinary approach adopted by the big corporate labs cannot be reproduced in a university setting.

The rise of the in-house R&D powerhouse

Interestingly, Professor Arora points out that the current trend towards outsourcing research was also a feature of US firms before the two World Wars.

At this time corporations looked outwards for innovation, purchasing patents rather than developing them in-house. They also brought in expertise from other disciplines, for example chemistry, which transformed the steel industry.

US firms only really started to build internal R&D capacity when antitrust policies began to limit growth through acquisition and it became apparent that growth would have to come from technological advance.

The post-war period marked the start of a golden age of corporate research. It was buoyed by wartime successes such as the atomic bomb, radar, penicillin and computing, which solidified the belief that new knowledge is a source of progress.

This was fuelled by the Cold War arms race, in which weapons and defence contractors set aside a small percentage of their current contract value to invest in basic science. Staying at the research frontier kept them in a position to bid on the next weapons system.

In parallel with this, America had become rich. The automobile brought increased demand for refined petroleum, which in turn increased the feedstocks for inventions such as paints, lacquers, synthetic fibres and plastics.

At AT&T, as at other US corporations, there came the realisation that they had reached the technical frontier. Competition was increasing from technologies such as long-distance radar, which competed with telephone lines, and the company believed that a fundamental understanding of materials was needed to understand the phenomena it was dealing with.

Advancement of science

Given the weak state of American universities at the time (until the 1930s, many leading American researchers still earned their PhDs in the UK or Germany), firms could not rely on universities to provide this fundamental knowledge and believed they had to produce it themselves. This culminated in an AT&T scientist, Clinton Davisson, winning the 1937 Nobel Prize in Physics.

Scientific achievements created corporate pride in the labs. Willis Whitney, who led GE’s research lab, is quoted as saying “I’m not just interested in practical results. The lab will of course have to pay for itself but in addition it should make a contribution to the advancement of science and knowledge.”

Professor Arora identifies this as a key period in which corporate philosophy changed: science was seen not only as a route to profits but also as having value in itself. The labs made substantial contributions to advancing science. Bell Labs alone accounted for 9 Nobel Prizes, shared among 14 laureates, for research conducted in its labs.

Is corporate research less valuable?

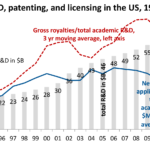

However, by the mid-1980s companies began to withdraw from corporate research, and many of the large labs were closed. The share of basic and applied research in total business R&D fell from about 29% in 1990 to about 20% in 2010, and has stayed there.

This was reflected in scientific output. According to research by Arora and his team, between 1980 and 1992 scientific publications contributed positively to the value of the firm; after that, they added no value.

From this, one might expect that science had become less useful for invention, but that is not the case. The percentage of US patents that cite science and engineering articles has increased dramatically, implying that science is ever more useful in invention. Patents have also become more influential in valuations: holding everything else constant, firms with twice the patent stock are 2% more valuable on the stock market.

The conclusion is that although science continues to contribute to invention, its private value to the firms that produce it has fallen. There are exceptions, notably AI, where companies that contribute to research papers see their value increase.

There are many theories as to why companies have withdrawn from in-house research, and many of them conflict.

New innovation ecosystem

Professor Arora connects the decline of research in corporate labs to changes in the innovation ecosystem, suggesting that we are seeing a return to organisations concentrating on their core competences and outsourcing research, with a new division of innovative labour between universities and start-ups.

According to R&D Magazine, which makes awards for the top 100 innovations, fewer than 20% of the winning innovations in 1971 were collaborations; by 2006 this had increased to 66%.

Universities are now well funded and play an increasingly important role, not just in producing knowledge but also, indirectly, in trying to monetise it, while venture capital plays an increasingly important role in getting spinouts to scale.

By 2011, US corporations earned $111 billion in licensing revenue, while their expenditure on basic and applied research was $47 billion.

Professor Arora comments that there are many reasons why this shift towards outside sources is good. Rather than independent inventors, we are seeing a new generation of university spinouts and early-stage technology companies becoming established, which creates diversity. As the economist Adam Smith observed, the key to countries becoming rich is specialisation and the division of labour.

What are we missing in the new ecosystem?

However, Professor Arora raises the question: what are we missing? He sees two important implications:

Direction of research: investment in deep tech falls. This covers areas such as quantum computing, new semiconducting materials, energy storage and biofuels. The new innovation system seems particularly good for software and life sciences, arguably because these areas attract VC funding, but it performs less well in areas of science that return benefits over longer time horizons. In parallel, the US federal government has also stepped back from long-term blue-sky research and focused more on applied research.

Impact: corporate research is often mission-orientated and architectural in scope. It is frequently multidisciplinary and conducted on a scale that cannot be reproduced in a university lab.

Take, for example, Google’s development of Google Translate. This required a multidisciplinary team of linguists, machine learning experts, statisticians and software engineers, as well as dedicated processor hardware. The influential Google Brain paper ‘Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation’ had 32 authors. Google’s work also drew on a data set of 3 million images and 375 million labels; by comparison, the public ImageNet data set released by Stanford has 1 million labelled images.

Filling the gaps to ensure prosperity

Speaking about the US, Professor Arora says that:

Government needs to provide more support for hard science in disciplines such as AI, materials, energy and bioengineering, and to enact policies that create demand and send a clear signal of support to the entrepreneurs and investors who are so important in this innovation system.

Universities need to understand that, for their research to have any impact, their mission must include better links to translation, not simply producing papers. Part of that mission is to provide better career tracks for graduates and postgraduates who want to transform the world.

Corporations must be more imaginative in how they manage research alongside smaller organisations.

And lastly, as a society we need to embrace the belief that progress comes from greater scientific advance. That’s the way forward, he concludes.

Professor Ashish Arora, of Duke University and NBER, presented ‘The Changing Structure of the American Innovation Ecosystem’ at the R&D Management Symposium 2020. He drew on joint work with colleagues Professor Sharon Belenzon, Lia Sheer and JK Suh of Duke University and Andrea Patacconi of Norwich Business School.

Visit Ashish’s LinkedIn profile: https://www.linkedin.com/in/ashish-arora-b7a2bb1/

Visit Ashish’s Twitter profile: https://twitter.com/profaroraashish